Bom dia! Reporting from Lisbon, this is CityNomix.

For someone immersed in digital marketing, sensing annual trends firsthand is as vital as breathing oxygen. There is a world of difference between watching a keynote on a screen and soaking up information amidst the palpable energy of a live venue. The “noise,” the collective gasps of the audience, and the vibrant atmosphere of the city itself create a context that video streams simply cannot convey.

Today, I’m bringing you a report straight from Lisbon, Portugal, where I attended Web Summit 2025, one of the world’s largest technology conferences. Specifically, I want to share the insights from Qualcomm’s keynote speech that most stimulated my intellectual curiosity.

The theme was “Qualcomm’s Vision for the Future of AI.”

This wasn’t just about the spec wars. It was a glimpse into how our lives and the relationship between humans and digital entities are about to fundamentally shift.

The Breeze of Lisbon and the Frenzy of Web Summit

November in Lisbon was surprisingly mild. On my way to the venue, the MEO Arena, I strolled along the Alameda dos Oceanos. This area, once the site of a World Expo, blends modern architecture with waterfront scenery—a fitting backdrop for discussing the future.

Walking along the wooden boardwalk beneath trees just beginning to turn autumnal colors was rhythmic and soothing. Along the water channel decorated with colorful tiles, tech enthusiasts and entrepreneurs from around the globe were heading to the venue, discussing the future in a myriad of languages. This time to “walk, shoot, and feel” is the essence of Photomo and serves as a warm-up to sharpen my resolution for the information ahead.

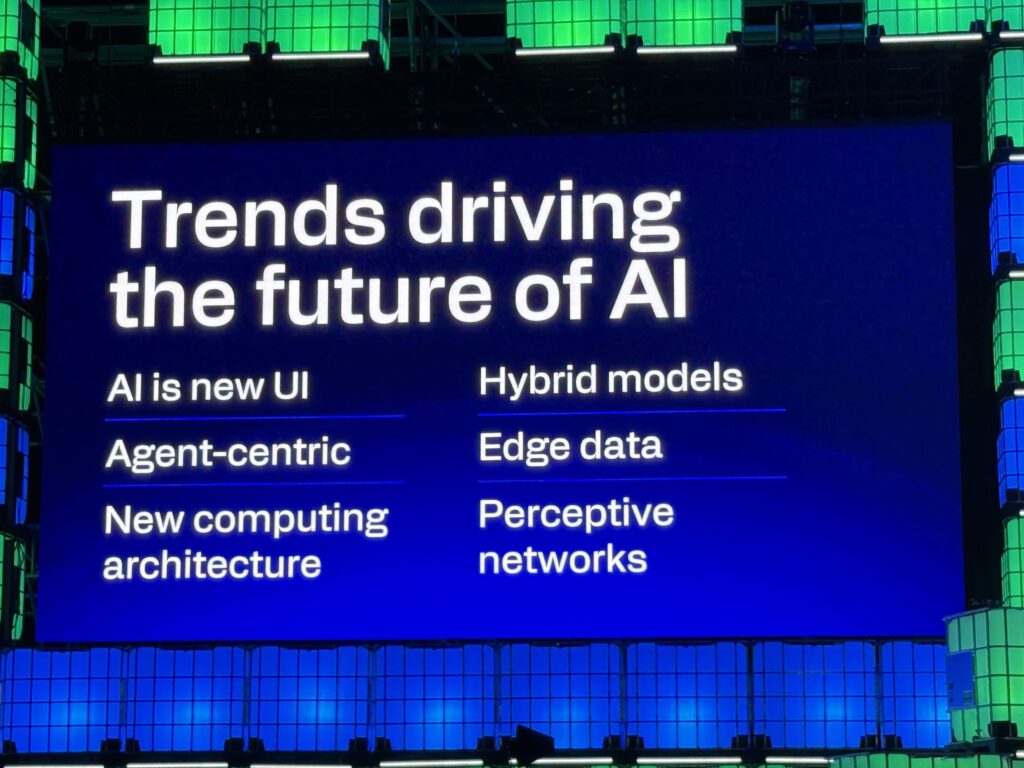

Inside the arena, the excitement was already swirling. Then came the highlight of Day 2: Qualcomm President & CEO, Cristiano Amon, took the stage.

Sitting on a stool on stage, with the words “-centered design in the age of AI” looming on the giant screen behind him, Amon began to speak. His topic was not the miniaturization of semiconductors, but a grand vision of how AI can become the “New UI” (User Interface).

Qualcomm’s Six Trends Driving the Future of AI

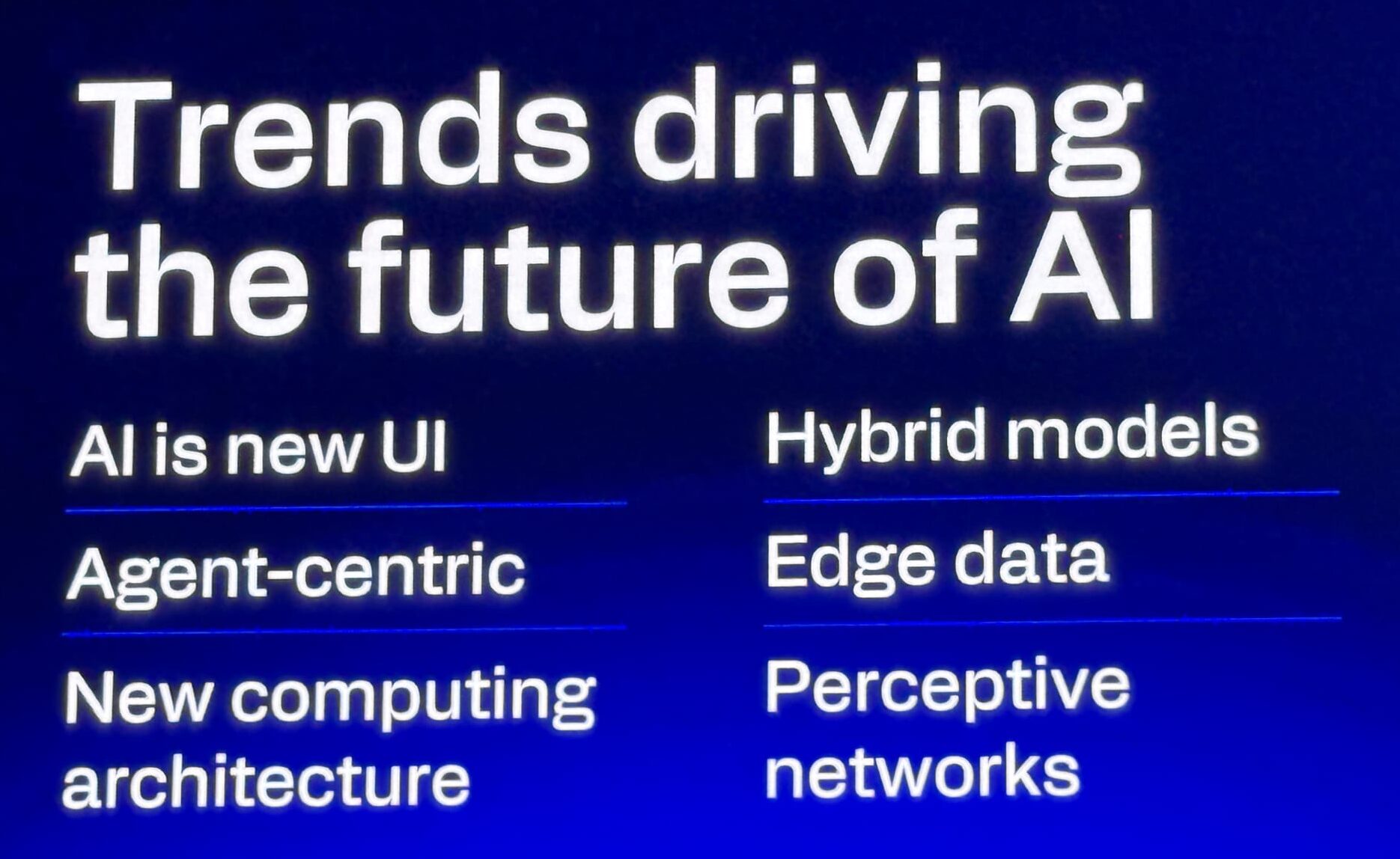

The slides Amon presented were incredibly thought-provoking. He outlined six key trends driving the future of AI:

- AI is new UI

- Agent-centric

- New computing architecture

- Hybrid models

- Edge data

- Perceptive networks

At first glance, this might look like a list of buzzwords. However, unraveling them reveals the keys to resolving the frustrations we feel with AI daily. I was particularly struck by the concept of “Agent-centric” and the infrastructure required to support it.

Unpacking the Meaning of Agent-Centric AI: From Tool to Partner

The keyword that resonated most with me during this keynote was “Agent-centric.” But what exactly does agent-centric AI mean?

Have you ever used generative AI and thought:

“I wish it understood me better.”

“I just told you that a minute ago.”

“Why do I have to explain the context of this image? It should just know.”

Most current AI operates as a “passive tool” that functions only when given a precise prompt. However, the agent-centric future Qualcomm envisions is one where AI evolves into an “active partner.” It autonomously understands the user’s context and acts proactively.

For instance, if I mutter, “I’m hungry,” a traditional AI might ask, “Shall I search for nearby restaurants?” An agent-centric AI, however, would integrate my past behavioral data, current location, blood sugar or heart rate data from my wearable device, and even my recent schedule (perhaps knowing I’m exhausted from meetings). It might then suggest, “You seem tired. Shall I book that quiet Portuguese restaurant you like? It’s only a five-minute walk from here.”

In essence, agent-centric AI means an AI that “senses” needs the user hasn’t even verbalized, deriving insights from vast amounts of context like daily behavior and biometric data. If it works as envisioned, it would effectively be more capable than a top-tier executive assistant.
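To make the “I’m hungry” example a little more concrete, here is a minimal Python sketch contrasting a passive reply with a context-aware one. Everything in it is my own illustration, not anything Qualcomm showed: the `UserContext` fields, the `Restaurant` type, and the “tired” thresholds are all hypothetical assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Restaurant:
    name: str
    walk_minutes: int
    quiet: bool


@dataclass
class UserContext:
    # Hypothetical stand-ins for the signals mentioned in the text
    # (schedule, wearable data, past behavior).
    meeting_hours_today: float = 0.0
    heart_rate_delta: int = 0  # bpm above the user's usual baseline
    favorites: list[Restaurant] = field(default_factory=list)


def passive_reply(utterance: str) -> str:
    """A prompt-only tool: reacts to the words and nothing else."""
    if "hungry" in utterance.lower():
        return "Shall I search for nearby restaurants?"
    return "How can I help?"


def agent_reply(utterance: str, ctx: UserContext) -> str:
    """An agent-style reply: folds stored context into the suggestion."""
    if "hungry" not in utterance.lower():
        return "How can I help?"
    # Made-up thresholds for "seems tired"; purely illustrative.
    tired = ctx.meeting_hours_today >= 4 or ctx.heart_rate_delta >= 10
    # When tired, only quiet favorites qualify; otherwise any favorite does.
    candidates = [r for r in ctx.favorites if r.quiet] if tired else list(ctx.favorites)
    if not candidates:
        # Without usable context, fall back to the passive behavior.
        return passive_reply(utterance)
    pick = min(candidates, key=lambda r: r.walk_minutes)
    prefix = "You seem tired. " if tired else ""
    return f"{prefix}Shall I book {pick.name}? It's a {pick.walk_minutes}-minute walk from here."
```

The point of the sketch is only the shape of the difference: the same three words produce a generic question in one case and a specific, context-shaped proposal in the other.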

Multimodal: The Key to a “Sensing” AI

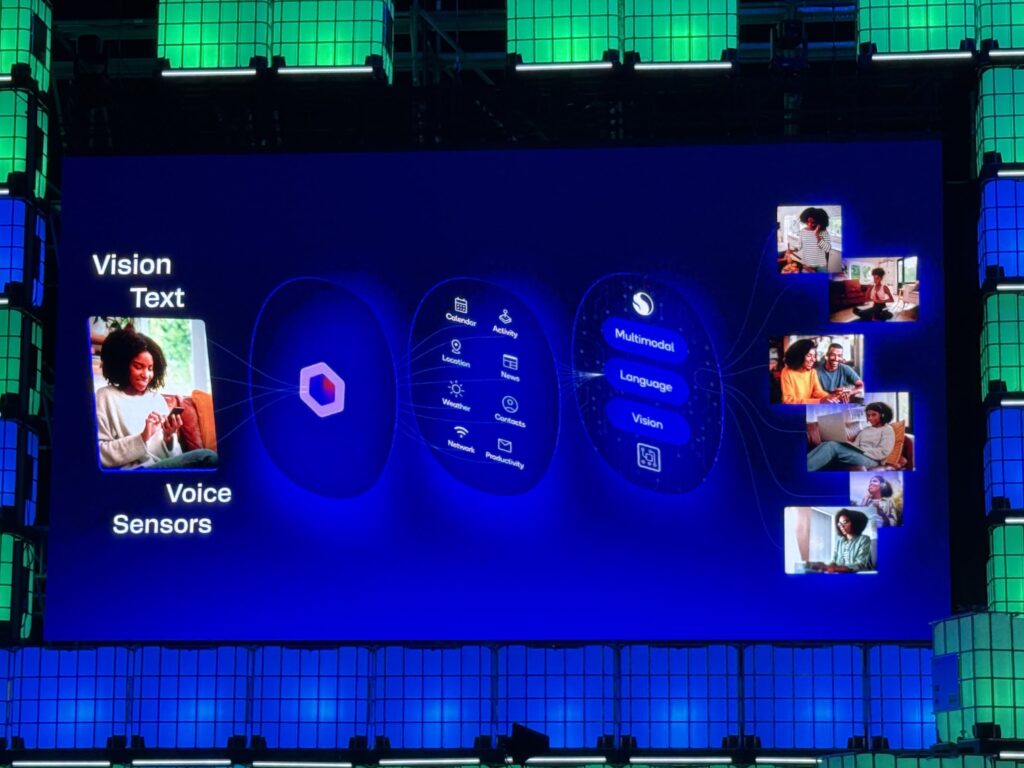

To achieve this “sensing” capability, diversifying input formats, or “multimodal” processing, is essential.

Humans don’t communicate solely through text. We use vision, voice, and atmosphere to understand one another. Qualcomm’s slides illustrated how these diverse inputs are integrated with personal data like calendars and location, then optimized for user life scenarios such as cooking, working, or yoga.

Text is precise but tedious to input. Images convey a lot of information instantly but can be open to interpretation. Combining these allows AI to drastically improve its understanding.

Hybrid Models: The Happy Marriage of Cloud and Edge

But a problem arises here. Should we send all this massive, private data to the cloud (the other side of the internet) for processing? There are security concerns, and the round-trip delay would be unacceptable for real-time assistance.

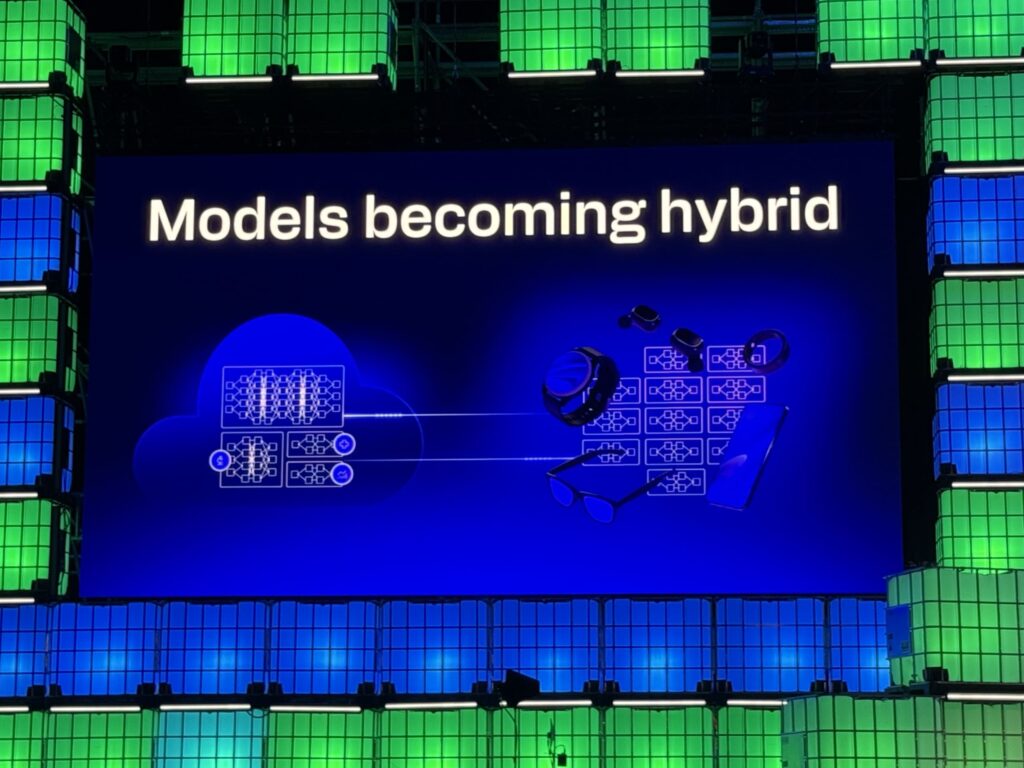

This is where “Hybrid models” come into play.

As the slide indicates, AI processing power is being distributed not just to the cloud, but also to the devices in our hands (smartphones, smartwatches, smart glasses). This is called “On-device AI” or “Edge AI.”

I interpret this as similar to the relationship between the human brain (Cloud) and reflexes (Edge). Instant judgments and privacy-sensitive processing happen on the device (reflexes), while the cloud (brain) is consulted only for tasks requiring massive computational power. Qualcomm emphasized that this “hybrid” approach is key to a real-time, secure AI experience.
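The reflex/brain split can be sketched as a simple routing rule. This is my own toy reading of the idea, not Qualcomm’s implementation: the `Task` fields, the `DEVICE_BUDGET_GFLOPS` figure, and the `route` function are hypothetical names and numbers chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    contains_private_data: bool
    needs_realtime: bool
    estimated_gflops: float  # rough compute cost; illustrative only


# Hypothetical budget for what an on-device NPU handles comfortably.
DEVICE_BUDGET_GFLOPS = 50.0


def route(task: Task) -> str:
    """Return "device" or "cloud", following the reflex/brain analogy."""
    # Privacy-sensitive or latency-critical work stays on the device (reflexes).
    if task.contains_private_data or task.needs_realtime:
        return "device"
    # Other work goes to the cloud (brain) only when it exceeds the local budget.
    if task.estimated_gflops > DEVICE_BUDGET_GFLOPS:
        return "cloud"
    return "device"


if __name__ == "__main__":
    tasks = [
        Task("heart-rate anomaly check", True, True, 0.5),
        Task("live caption for smart glasses", False, True, 20.0),
        Task("summarize a year of documents", False, False, 5000.0),
    ]
    for t in tasks:
        print(f"{t.name}: {route(t)}")
```

A real system would need more nuance, for example what to do with a private task that is too heavy for the device, but the sketch captures the default: stay local unless the job clearly needs the cloud’s scale.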

6G and Perceptive Networks: The Space Itself Becomes a Sensor

The conversation then moved further into the future: “Perceptive networks” and 6G.

Amon described 6G as a network designed specifically for AI usage. What fascinated me was the idea that the 6G network itself could function as a sensor. By using the radio signals from base stations, the network could accurately track the position of objects and human movements in a space without relying on cameras or wearable devices.

This sounds a bit sci-fi, and from a privacy perspective, perhaps a little “scary.” Amon himself seemed to choose his words carefully regarding the potential and sensitivity of this technology.

However, if implemented safely, this means AI could understand our situation (e.g., someone has fallen, is running, or is in the room) even without wearables, allowing for emergency support or environmental control. It’s a state where “the space knows you.” This could lead to the ultimate understanding of context.

Wearable Evolution and the Qualcomm Ecosystem

To realize this future, Qualcomm is pouring resources into smart glasses and XR devices.

Meta, RayNeo, XREAL, Rokid… The number of partner companies displayed on the slide was staggering. We are approaching an era of “AI eyewear,” where we don’t need to take out a smartphone; our very field of vision becomes the information interface, and everything we see becomes input for the AI.

Furthermore, AI application is not limited to the personal sphere.

From robotics to autonomous driving and industrial infrastructure, a shift towards “Autonomous AI”—where AI judges and acts independently—is progressing across all sectors.

CityNomix’s Perspective: How Should We Prepare?

Listening to Qualcomm’s presentation at Web Summit convinced me of one thing. In future digital marketing and our lifestyles, the critical skill will not just be “commanding” AI, but “building a partnership” where we share context with it.

Practical Takeaways:

- New Criteria for Device Selection: When buying new smartphones, PCs, or wearables, don’t just look at processing speed. Look for “NPU performance” and “On-device AI readiness.” Qualcomm’s Snapdragon-powered devices will likely lead in this regard.

- Understanding the Value of Your Data: To benefit from agent-centric AI, you need to open up some of your data to AI. Developing the literacy to know what to share and what to protect is key to balancing comfort and safety.

- Invest in Wearables: Smartwatches and smart glasses are evolving from notification devices into “input terminals” for AI. Getting into the habit of digitizing your biometric data now, perhaps starting with a smartwatch, should help you benefit smoothly when AI agents become more precise.

Conclusion: With the Lisbon Sunset

As I left the MEO Arena, the setting sun was casting a glow over the Tagus River. Technology like AI can sometimes feel cold and inorganic, but I felt that Qualcomm is aiming for a future where technology grasps human intent and provides warm, supportive assistance.

“AI is new UI.”

A magical daily life where you don’t type, but simply speak—or even just think—and the world responds, is just around the corner.

Lisbon’s historic streets and cutting-edge technology. With the inspiration gained from this place where past and future intersect, I am ready to walk to the next city. I encourage you all to try new devices and services while keeping in mind what agent-centric AI means. The scenery will surely look different.

Until next time, Até logo!